AI art generators have come a long way in recent years, and it’s now possible to create some truly stunning pieces of art using them.

In this blog post, we’ll be comparing three different AI art generators – Dall-E 2, Midjourney, and Stable Diffusion – to see which one produces the best results.

We’ll be running the same three prompts through all of them and seeing which one comes out on top. So let’s get started!

Also check out my newest comparison test between Midjourney V4 and Stable Diffusion 2.0.


Comparing 3 Different AI Art Generators

In this test we will compare results from the three most popular AI art generators: Stable Diffusion, Midjourney and Dall-E 2. Here is a bit of information about each one:

Stable Diffusion

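Stable Diffusion is a text-to-image model that starts from random noise and gradually refines it over a number of steps, guided by the text prompt, until a coherent image emerges. These steps run in a compressed latent space, and a decoder turns the final result into the full-size image.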
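To make the "start from noise and refine it step by step" idea concrete, here is a minimal sketch of a deterministic DDIM-style sampling loop on a tiny 4-pixel "image". It is a toy under loud assumptions: `toy_denoiser` is a hypothetical stand-in that simply returns the known target (a real model predicts the clean image from the noisy input, the step number and the prompt), the cosine noise schedule is one common choice rather than Stable Diffusion's exact configuration, and there is no latent space or text conditioning here.

```python
import math
import random


def alpha_bar(t: int, steps: int) -> float:
    """Cosine noise schedule: share of the clean signal left at step t.
    Equals 1.0 at t=0 (clean image) and is ~0 at t=steps (pure noise)."""
    return math.cos((t / steps) * math.pi / 2) ** 2


def toy_denoiser(x_t, t, target):
    """HYPOTHETICAL stand-in for the trained network.
    A real model would predict the clean image from x_t, t and the prompt;
    this toy 'cheats' and returns the known target so the loop is easy to follow."""
    return list(target)


def sample(target, steps=50, seed=0):
    """Deterministic DDIM-style sampling loop on a flat list of pixel values."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # generation starts from pure noise
    for t in range(steps, 0, -1):
        ab_t, ab_prev = alpha_bar(t, steps), alpha_bar(t - 1, steps)
        x0_pred = toy_denoiser(x, t, target)
        # Noise estimate implied by the current sample and the predicted clean image.
        eps = [(xi - math.sqrt(ab_t) * x0) / math.sqrt(1.0 - ab_t)
               for xi, x0 in zip(x, x0_pred)]
        # Re-noise the prediction to the slightly lower noise level of step t-1.
        x = [math.sqrt(ab_prev) * x0 + math.sqrt(1.0 - ab_prev) * e
             for x0, e in zip(x0_pred, eps)]
    return x


target = [0.2, -0.5, 0.9, 0.0]  # a tiny 4-pixel "image"
result = sample(target)
print([round(v, 6) for v in result])  # → [0.2, -0.5, 0.9, 0.0]
```

Because the toy predictor is perfect, the loop always lands exactly on the target; the point is the shape of the loop (predict, estimate the noise, step to a lower noise level), which is what a trained model repeats with imperfect predictions that improve as the noise shrinks.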

The model was trained on LAION-Aesthetics, a subset of the LAION-5B dataset: it contains 120 million image-text pairs taken from the complete set of nearly 6 billion image-text pairs.

Stable Diffusion reportedly runs on less than 10 GB of VRAM at inference time and generates 512×512 images in just a few seconds, which means it can run on consumer GPUs.
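Part of the reason it fits on consumer GPUs is that the diffusion steps operate on a compressed latent rather than on full-resolution pixels. The sketch below works through that arithmetic, assuming the figures published for Stable Diffusion v1 (an autoencoder that downsamples by a factor of 8 into 4 channels, an 860M-parameter U-Net and a 123M-parameter CLIP ViT-L/14 text encoder) and half-precision (2-byte) weights. The helper names are illustrative, and the weight figure ignores the autoencoder and the activations that also occupy VRAM.

```python
def pixel_values(width: int, height: int, channels: int = 3) -> int:
    """Number of values in an RGB image."""
    return width * height * channels


def latent_values(width: int, height: int, factor: int = 8, channels: int = 4) -> int:
    """Number of values in the latent the diffusion steps operate on
    (assumes SD v1's 8x downsampling into 4 channels)."""
    return (width // factor) * (height // factor) * channels


def weights_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights in decimal gigabytes (2 bytes per value = fp16)."""
    return num_params * bytes_per_param / 1e9


pixels = pixel_values(512, 512)    # 512 x 512 x 3 = 786,432 values
latents = latent_values(512, 512)  # 64 x 64 x 4 = 16,384 values
print(pixels // latents)           # 48: each denoising step works on ~48x less data

# Approximate SD v1 parameter counts: U-Net + text encoder.
print(round(weights_gb(860e6 + 123e6), 2))  # 1.97 (GB of fp16 weights)
```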

The Stable Diffusion model is best suited to digital art and very creative, abstract drawings. Overall, it is much faster and more efficient than DALL·E. First of all, its carbon footprint is smaller.

Second, anyone with a graphics card that has around 10 GB of memory can run it, and it generates images in a few seconds without needing much more hardware than that. Stable Diffusion is also open source, which means anyone can view and modify its source code. Additionally, open source software is typically free to use.

Midjourney

Midjourney is a new AI-powered tool that can turn any text into a piece of artwork. The resulting art is unique and can be quite breathtaking. The company behind Midjourney describes itself as a research lab that explores new ways of thinking and aims to expand the imaginative powers of the human species.

How does Midjourney work?

Midjourney has not published the details of its model, so the description below follows the general design OpenAI published for DALL-E 2 and may differ from what Midjourney actually does. The text prompt is first fed into a text encoder, which is trained to map the prompt into a representation space. Next, a model called the prior maps the text encoding to a corresponding image encoding that captures the semantic information of the prompt.

Finally, an image decoder stochastically generates an image that is a visual manifestation of this semantic information.

In other words, Midjourney takes a text prompt and uses it to generate an image.
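The three-stage pipeline of text encoder, prior and decoder can be sketched with toy stand-ins to show what each stage passes to the next. This pipeline is the one OpenAI published for DALL-E 2; Midjourney's internals are not public, so treat this only as an illustration of the data flow. Every function here (`text_encoder`, `prior`, `decoder`, `generate`) is hypothetical and made up for the example, not any real model or API: real systems use large trained networks at each stage.

```python
import hashlib
import random

DIM = 8  # size of the toy embedding vectors (illustrative)


def text_encoder(prompt: str) -> list[float]:
    """Toy text encoder: hashes each word into one of DIM slots (a 'bag of words').
    Real systems use a trained transformer such as CLIP's text encoder."""
    vec = [0.0] * DIM
    for word in prompt.lower().split():
        slot = int(hashlib.sha256(word.encode()).hexdigest(), 16) % DIM
        vec[slot] += 1.0
    return vec


def prior(text_embedding: list[float]) -> list[float]:
    """Toy prior: maps a text embedding to an 'image embedding'.
    A real prior is a trained model; this is a fixed, made-up normalization."""
    total = sum(text_embedding) or 1.0
    return [v / total for v in text_embedding]


def decoder(image_embedding: list[float], seed: int, size: int = 16) -> list[float]:
    """Toy decoder: turns the image embedding into 'pixels' plus random variation,
    so the same embedding yields a different image for each seed."""
    rng = random.Random(seed)
    return [image_embedding[i % DIM] + rng.gauss(0.0, 0.05) for i in range(size)]


def generate(prompt: str, seed: int = 0) -> list[float]:
    """Run the three stages in order: text -> text embedding -> image embedding -> image."""
    return decoder(prior(text_encoder(prompt)), seed)


a = generate("a unicorn running through fluffy clouds", seed=1)
b = generate("a unicorn running through fluffy clouds", seed=2)
print(a == generate("a unicorn running through fluffy clouds", seed=1))  # True: same seed
print(a == b)  # False: the decoder is stochastic, so a new seed gives a new image
```

The stochastic decoder is why running the same prompt twice produces different pictures: the prompt fixes the semantic content, while the random seed fixes the particular image.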

Is Midjourney free?

Yes, Midjourney AI has a free tier that allows users to generate 25 images. After that, users must subscribe to one of two paid plans in order to continue using the service.

Dall-E 2

DALL-E 2 is a computer program created by OpenAI that uses artificial intelligence to generate images from text descriptions. It is the successor to the original DALL-E program, which was first introduced in 2021.

The program works by first recognizing the specific elements of an image described in the text, and then gradually altering a pattern of random dots until it becomes that image. It can also make realistic edits to existing images, such as adding or removing elements.

Finally, the program can create different variations of an existing image, inspired by the original. DALL-E 2 is a significant improvement over its predecessor, as it generates images with 4x greater resolution than DALL-E.
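"4x greater resolution" is easy to misread. Assuming the commonly cited square output sizes (256×256 pixels for the original DALL-E, 1024×1024 for DALL-E 2), the factor applies to each side, so the total pixel count grows 16-fold. A small illustrative check (`pixel_count` is just a helper for this example):

```python
def pixel_count(side: int) -> int:
    """Pixels in a square image with the given side length."""
    return side * side


dalle_1_side, dalle_2_side = 256, 1024  # square output sizes in pixels
print(dalle_2_side // dalle_1_side)                            # 4  (per side)
print(pixel_count(dalle_2_side) // pixel_count(dalle_1_side))  # 16 (total pixels)
```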

Additionally, the program has been designed to prevent the generation of harmful images, such as those depicting violence, hate, or nudity.

Stable Diffusion vs Midjourney vs Dall-E 2 Comparison Test

These are the results of the comparison test between Stable Diffusion, Midjourney and Dall-E 2.

Of course, these results are based only on my opinion, so there is a lot of room for interpretation here.

But let’s have a look at the results, and leave a comment if you would have picked something else.

Test 1:

The first comparison test between Stable Diffusion, Midjourney and Dall-E 2 was a portrait image of a young woman. The prompt I used to create these AI art images was:

portrait of a pretty young woman, blue eyes, make-up, dark , soft light, cinematic, 8k

My conclusion of test 1:

Left: Midjourney

Middle: Dall-E 2

Right: Stable Diffusion

I found the portrait images to be of excellent quality overall. I was most impressed with the portrait generated by Dall-E 2, which I thought looked very realistic and symmetrical. I also liked the hair and eyes in this image.

The portrait from Stable Diffusion was also very good, although I did not like the eyes as much. I thought the Midjourney portrait was good as well, but I did not like its eyes either.

So that’s one point for Dall-E 2.

Test 2:

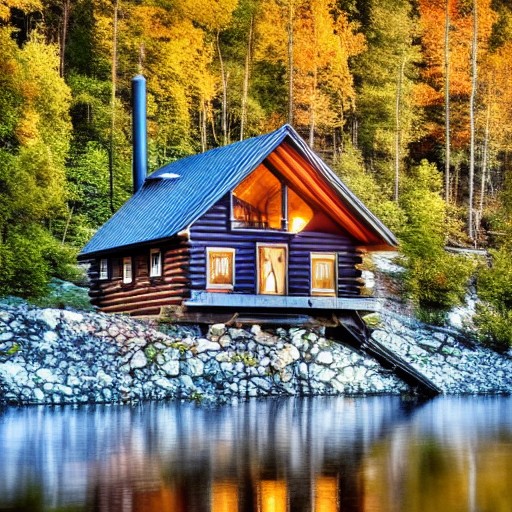

The second comparison test between Stable Diffusion, Midjourney and Dall-E 2 was a landscape image of a Norwegian fishing cabin. The prompt I used to create these AI art images was:

a Beautiful riverside fishing cabin in Norway, Cottagecore, Cottage, Lodge, Hyperdetailed, Autumn, stunning natural scenery, forest, Landscape, cinematic lighting, raking sunlight, sunrise, glade, intricate detail,picturesque, lovely, optics, shadows, storybook illustration

My conclusion of test 2:

Left: Dall-E 2

Middle: Stable Diffusion

Right: Midjourney

I found the landscape images to be of excellent quality overall. I was most impressed with the landscape generated by Dall-E 2, which I thought captured the feeling of a cabin in Norway perfectly.

The landscape from Stable Diffusion was also very good, although I thought it was a bit too realistic. I liked the mountains in the background of the Midjourney image and its cabin style, but I thought the image was a bit too busy.

So Dall-E 2 wins again, and quite easily.

Test 3:

The third comparison test between Stable Diffusion, Midjourney and Dall-E 2 was an oil painting of a unicorn running through fluffy clouds. The prompt I used to create these AI art images was:

Unicorn running through fluffy clouds, dynamic, movement, beautiful oil painting, fine art, award-winning art, beautiful lighting, intricate detail

My conclusion of test 3:

Left: Stable Diffusion

Middle: Dall-E 2

Right: Midjourney

I found the unicorn images to be of somewhat lower quality overall than those in the other two categories. I was most impressed with the unicorn generated by Stable Diffusion, which I thought looked very realistic. I also liked the colors in this image.

The unicorn from Midjourney was also good, although I thought the horns looked a bit odd. I liked the Dall-E 2 unicorn as well: it had the best body proportions and wings, but I found it a bit boring.

So the winner here was Stable Diffusion.

Conclusion

Overall, I thought that Dall-E 2 produced the best results in this comparison test. I was most impressed with the images it generated in the portrait and landscape categories.

In terms of speed and efficiency, Stable Diffusion was the best AI art generator. Midjourney did not win any of the tests, but it performed well in all categories.

So my conclusion is that all of these AI art generators are good choices. It just comes down to your personal preference and what you’re looking for.
