AI art is a new and exciting field that is only just beginning to be explored. One of the most impressive and interesting examples of AI art is the Stable Diffusion model.

This model is able to generate images that are remarkably coherent and creative, all from a simple text prompt.

What’s even more impressive is that the Stable Diffusion model is open source. Below, we go into more detail about how the model works and how you can use it to generate your own images.

What is Stable Diffusion AI?

Stable Diffusion is a text-to-image model that starts from a noise vector and modifies it over a number of steps until a coherent image emerges.
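To make the step-by-step idea concrete, here is a toy sketch of iterative refinement from noise. It is not the actual model: a real diffusion model uses a trained neural network to predict which noise to remove, whereas this example cheats by using a known target. The names `toy_denoise`, `distance`, and the 5-value "image" are made up for illustration.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Move a random noise vector toward `target` a little at each step.

    Toy stand-in only: the "noise prediction" here is just the gap between
    the current vector and a known target, not a trained network.
    """
    rng = random.Random(seed)
    # Start from pure Gaussian noise, one value per "pixel".
    x = [rng.gauss(0.0, 1.0) for _ in target]
    history = [list(x)]
    for step in range(steps):
        # Fraction of the remaining gap closed at this step; reaches 1.0
        # on the final step so the loop ends at the target.
        rate = 1.0 / (steps - step)
        x = [xi + rate * (ti - xi) for xi, ti in zip(x, target)]
        history.append(list(x))
    return history


def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5


target = [0.0, 0.25, 0.5, 0.75, 1.0]  # hypothetical 5-pixel "image"
history = toy_denoise(target, steps=10)
print(distance(history[0], target))   # pure noise, far from the target
print(distance(history[-1], target))  # close to zero after the last step
```

The point of the sketch is only the shape of the loop: each pass removes part of the remaining noise, so the result sharpens gradually rather than appearing in one shot.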

The model was trained on the LAION Aesthetics dataset, a subset of about 120 million image-text pairs drawn from the LAION 5B dataset, which contains nearly 6 billion image-text pairs in total.

LAION datasets are developed to be freely accessible for the promotion of a democratized AI development environment. Stable Diffusion reportedly runs on less than 10 GB of VRAM at inference time, generating 512×512 images in just a few seconds, meaning running on consumer GPUs is an option.

The Stable Diffusion model is best for digital art design and very creative and abstract drawings.

Overall, it’s much faster and more efficient than DALL·E. First, the carbon footprint is smaller. Second, anyone with a graphics card that has 10 GB of VRAM can run the model, and it produces an image in a few seconds without requiring as much hardware.

Is Stable Diffusion open source?

Yes, Stable Diffusion is open source. The source code is publicly available, so anyone can download, view, use, and modify it for free, and anyone can contribute to the project or make their own edits.

This makes it an ideal choice for those who want to create custom text-to-image generators. It is also a very useful tool for generative AI creative work.

How does Stable Diffusion work?

Stable Diffusion is a latent diffusion model. It interprets the text prompt with CLIP (Contrastive Language–Image Pre-training), a model trained to learn statistical associations between words and images.
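The word "latent" matters for efficiency: the denoising runs on a compressed representation of the image rather than on the pixels themselves. The arithmetic below is a rough sketch that assumes the commonly cited Stable Diffusion v1 setup (a downsampling factor of 8 and 4 latent channels); these numbers and the function names are assumptions for illustration, not taken from this article.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape (channels, height, width) of the latent for a given image size.

    The defaults assume the Stable Diffusion v1 autoencoder settings.
    """
    if height % downsample or width % downsample:
        raise ValueError("height and width must be multiples of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height, width, image_channels=3):
    """How many times fewer values the latent holds than the RGB image."""
    channels, lat_h, lat_w = latent_shape(height, width)
    return (image_channels * height * width) / (channels * lat_h * lat_w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, a 512×512 image is denoised as a 64×64 grid with 4 channels, roughly 48 times fewer values, which helps explain why the model fits on consumer GPUs.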

Through training, the model learns to recognize familiar shapes in a field of pure noise, and then gradually brings those elements into focus if they match the words in the prompt.

The model is trained on a dataset of images with accompanying metadata (such as alt tags and captions) using a bank of high-end GPUs. During training, the model associates words with images thanks to CLIP, which allows it to make inferences and style combinations that seem very intelligent.
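The word-image association can be pictured as comparing vectors: text and images are mapped into the same space, and matching pairs end up pointing in similar directions. The sketch below uses hand-made 3-dimensional vectors as hypothetical embeddings; real CLIP embeddings have hundreds of dimensions and come from trained encoders, and `best_caption` is an illustrative helper, not part of any library.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding is most similar to the image's."""
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(image_embedding, caption_embeddings[caption]),
    )


# Hypothetical embeddings, invented for this example.
caption_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a painting of a boat": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.2, 0.1]

print(best_caption(image_embedding, caption_embeddings))  # a photo of a cat
```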

Once the model is trained, it can be used to generate new images by providing a text prompt. The model will then generate an image that matches the description provided.

Stable Diffusion also supports inpainting (regenerating a selected region of an image) and outpainting (extending an image beyond its original borders).
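Both features rely on a mask that tells the model which pixels to generate and which to keep. The sketch below shows only that bookkeeping for the outpainting case, using plain nested lists as a stand-in for an image; the helper names `outpaint_canvas` and `composite` are hypothetical, and the "generated" pixels are a constant placeholder rather than real model output.

```python
def outpaint_canvas(image, pad, fill=0):
    """Pad a 2-D image on every side and build the matching mask.

    In the mask, 1 marks a pixel to generate and 0 a pixel to keep.
    """
    height, width = len(image), len(image[0])
    new_h, new_w = height + 2 * pad, width + 2 * pad
    canvas = [[fill] * new_w for _ in range(new_h)]
    mask = [[1] * new_w for _ in range(new_h)]
    for r in range(height):
        for c in range(width):
            canvas[r + pad][c + pad] = image[r][c]
            mask[r + pad][c + pad] = 0
    return canvas, mask


def composite(original, generated, mask):
    """Take generated pixels where the mask is 1, original pixels elsewhere."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


image = [[5, 5], [5, 5]]                 # tiny 2x2 "image"
canvas, mask = outpaint_canvas(image, pad=1)
generated = [[9] * 4 for _ in range(4)]  # placeholder for model output
result = composite(canvas, generated, mask)
for row in result:
    print(row)
```

Inpainting uses the same compositing step; the only difference is that the mask covers a region inside the image instead of a new border around it.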

Who made Stable Diffusion?

Stability AI is a company that builds open AI tools in order to help humanity reach its potential. Its values are evident in the work of its team members, who constantly strive to improve their methods and techniques. The company has a strong focus on community, and its team of experts and leaders is constantly developing new ways to use AI, like the Stable Diffusion model.

Emad Mostaque is the founder of Stability AI. He is a former hedge fund manager who wants to bring the benefits of deep learning to the masses. Under his lead, Stability AI backed the release of Stable Diffusion, which was developed together with researchers from the CompVis group at LMU Munich and Runway, and his aim is to make it accessible to as many people as possible.

How can you run Stable Diffusion?

You can run Stable Diffusion on your own computer or server if it meets the requirements. Alternatively, you can use the DreamStudio web app or Google Colab. Let’s have a look at these three alternatives:

1. Running Stable Diffusion in your browser with DreamStudio

You can generate images with Stable Diffusion by using the DreamStudio web app. With DreamStudio, you can create any image you can dream of. To use DreamStudio:

– Navigate to the DreamStudio site.

– Create an account.

– Once you are in, input your text into the textbox at the bottom, next to the Dream button.

– Click on the Dream button once you have given your input to create the image.

– Your image will be generated within 5 seconds.

– You can download the image you created by clicking the download icon in the middle of the generated image.

– There are a couple of settings on the right side that you can tweak, like the resolution of the image or the number of images to be generated.

2. Running Stable Diffusion on Google Colab

You can also run Stable Diffusion in the cloud via Google Colab. The caveat is that you might need a Colab Pro account, since the free version of Colab has slightly too little VRAM to generate images:

– Navigate to Google Colab and sign in with a Google account.

– Open a notebook that runs Stable Diffusion.

– Set the runtime type to GPU under Runtime > Change runtime type.

– Run the notebook cells in order to install the dependencies and load the model.

– Enter your text prompt in the generation cell and run it.

– The generated image appears in the cell output, where you can save it.

3. Running Stable Diffusion locally on your computer

Because Stable Diffusion can run on consumer-level GPUs, you can also run it locally on your PC. You will need a GPU with at least 6 GB of VRAM. The steps may seem a bit complicated, but they are actually easy to follow:

– Install Miniconda3.

– Install Git.

– Download the Stable Diffusion files from GitHub.

– Download the latest model checkpoint.

– Run the code on your computer.
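Once the environment is set up, generating an image comes down to a few calls. The sketch below shows one possible way to do it. It assumes the Hugging Face `diffusers` library and PyTorch are installed, which is a different route from the GitHub repository files listed above, and it assumes the `CompVis/stable-diffusion-v1-4` checkpoint. The function name `generate_image` and its defaults are illustrative choices.

```python
def generate_image(prompt, out_path="output.png", steps=50, guidance_scale=7.5,
                   height=512, width=512,
                   model_id="CompVis/stable-diffusion-v1-4"):
    """Generate one image from a text prompt and save it to `out_path`.

    Assumes `torch` and `diffusers` are installed; the model weights are
    downloaded on first use.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if height % 8 or width % 8:
        raise ValueError("height and width must be divisible by 8")

    # Heavy imports are deferred so the checks above run without a GPU stack.
    import torch
    from diffusers import StableDiffusionPipeline

    use_cuda = torch.cuda.is_available()
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id,
        # Half precision reduces VRAM use on the GPU; CPU needs float32.
        torch_dtype=torch.float16 if use_cuda else torch.float32,
    )
    pipe = pipe.to("cuda" if use_cuda else "cpu")

    result = pipe(
        prompt,
        num_inference_steps=steps,      # more steps: slower, often cleaner
        guidance_scale=guidance_scale,  # how strongly to follow the prompt
        height=height,
        width=width,
    )
    result.images[0].save(out_path)
    return out_path


# Example call (downloads several GB of weights the first time):
# generate_image("an astronaut riding a horse, digital art")
```

Running without a GPU should work with this sketch but is likely to be much slower, since it falls back to the CPU in full precision.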

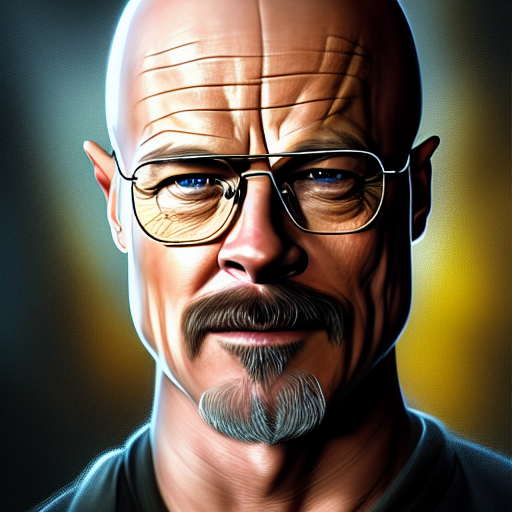

Stable Diffusion examples:

Conclusion with key takeaways:

The Stable Diffusion model is an open source AI tool that can be used to generate creative and coherent images from text prompts. The model is easy to use and can be run on most consumer-level GPUs.

Those who are interested in creating their own text-to-image generators will find Stable Diffusion to be an ideal choice.

Key takeaways:

– The Stable Diffusion model is an open source AI art tool that can generate remarkably coherent and creative images from a simple text prompt.

– The model is open source, meaning that anyone can view and modify the source code. Additionally, open source software is typically free to use.

– The Stable Diffusion model is best for digital art design and very creative and abstract drawings.

– The model is able to generate images that match the description provided in a text prompt.

– To use the Stable Diffusion model, you can run it locally on your own computer or server, use the DreamStudio web app, or run it on Google Colab.