Today, I am particularly excited to introduce you to the game-changing capabilities of GPT-4, specifically its larger context window. This breakthrough has propelled the model to new heights, enhancing its performance and unlocking a world of possibilities across various applications.

So join me as we delve into GPT-4 and see why its expanded context window is changing the way we interact with, and harness the power of, LLMs (large language models).

What is the context window in GPT-4?

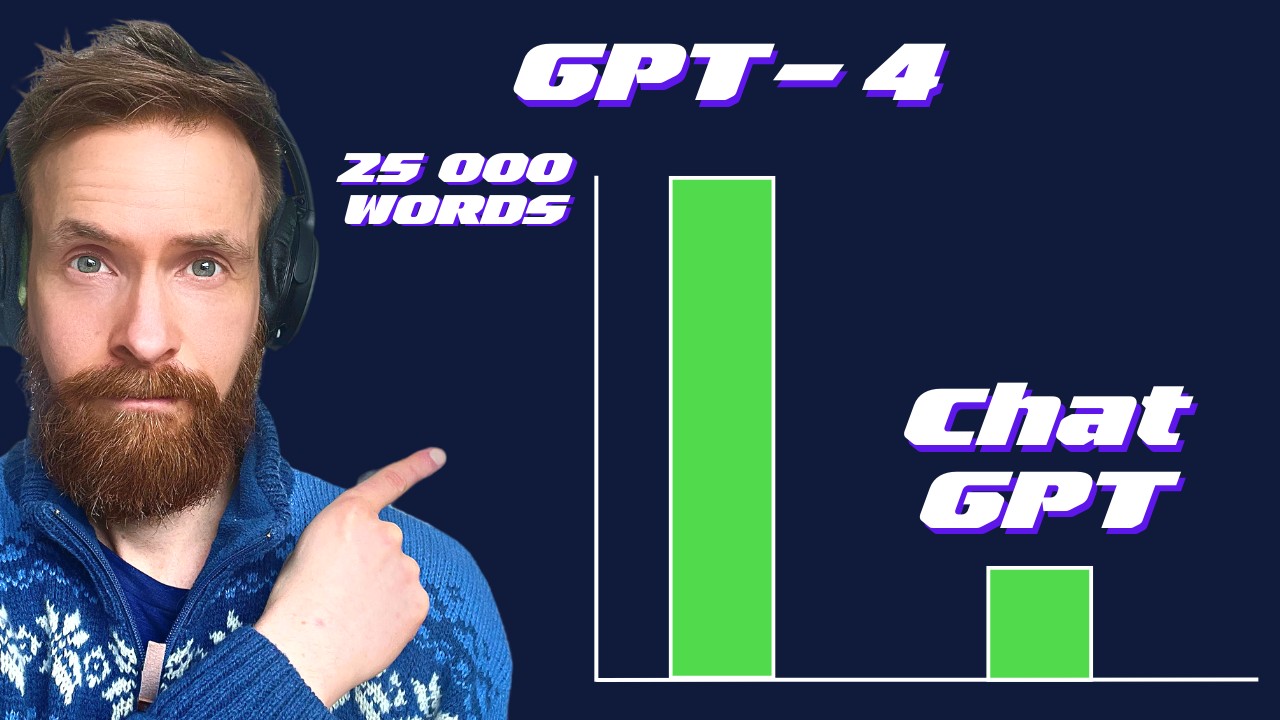

The context window in GPT-4 is the number of tokens (chunks of text, often a whole word or part of one) that the model can access and consider when generating a response to a prompt or user input. The ability to process a larger context window is a significant improvement over its predecessor GPT-3.5 (the model behind the original ChatGPT), which was limited to about 4,000 tokens (approximately 3,000 words). GPT-4 offers an 8,000-token context window, and its largest model extends this to a staggering 32,000 tokens, which equates to about 25,000 words.
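The token-to-word figures above follow a common rule of thumb of roughly 0.75 English words per token. The sketch below simply applies that assumed ratio to the exact limits usually quoted for these models (4,096, 8,192, and 32,768 tokens). Real word counts depend on the tokenizer and the text, so treat the results as rough estimates.

```python
# Rough conversion from a token limit to an approximate English word count.
# ASSUMPTION: ~0.75 words per token, a rule of thumb for English text;
# the real ratio varies with the tokenizer, language, and content.
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


for label, limit in [("4K", 4_096), ("8K", 8_192), ("32K", 32_768)]:
    print(f"{label} window: ~{approx_words(limit):,} words")
```

This gives about 3,072 words for a 4K window and about 24,576 words for a 32K window, in line with the roughly 3,000 and 25,000 word figures.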

This enhancement in context window size has a profound impact on the model’s performance and utility across various applications. With a larger context window, GPT-4 can now effectively handle more complex and lengthy inputs, such as processing entire documents, understanding the full scope of an article, or even creating content based on a broader set of information. This allows the AI to generate more accurate and contextually relevant responses by utilizing a more comprehensive understanding of the input.

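A useful way to picture the context window is as a sliding window of tokens, with the most recent tokens always kept in focus. The model can only attend to a fixed number of tokens at once, so as a conversation or document grows, older tokens may fall outside the window and the model will no longer be able to access them. Applications built on the model, such as chat interfaces, usually handle this by dropping the oldest content first.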
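The sliding-window behaviour described above can be illustrated with a fixed-size buffer: once it is full, each new token pushes out the oldest one. This is a toy model for intuition only. It assumes whitespace-separated words as stand-in "tokens" and a tiny window of 5, and it is not a description of how any particular GPT-4 deployment manages its context.

```python
from collections import deque

# Toy model of a context window that always keeps the most recent tokens.
# ASSUMPTIONS: words stand in for tokens, and the window is tiny (5) for readability.
WINDOW_SIZE = 5


def visible_context(tokens, window_size=WINDOW_SIZE):
    """Return the tokens still 'visible' after feeding all of them through the window."""
    window = deque(maxlen=window_size)  # a full deque discards from the left on append
    for token in tokens:
        window.append(token)
    return list(window)


text = "the quick brown fox jumps over the lazy dog"
print(visible_context(text.split()))  # ['jumps', 'over', 'the', 'lazy', 'dog']
```

The first four words have fallen out of the window, so anything that depended on them is no longer available to the model.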

For example, if GPT-4’s 32K-token model is given a 6,000-token input and produces a 4,000-token response, the total is 10,000 tokens, which still falls well within the model’s context window; the input and the output share the same window. If the total would exceed the model’s limit, however, something has to give: the request may be rejected, the response may be cut short, or earlier content has to be trimmed, and the model can no longer base its answer on the full context.
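The arithmetic in this example is a sum compared against a limit: prompt tokens plus the tokens reserved for the response must fit in the window. A minimal sketch, assuming a limit of 32,768 tokens (the figure usually quoted for the 32K GPT-4 model); `fits_in_context` is a hypothetical helper, not part of any API.

```python
# Check whether a prompt plus its expected response fits in a context window.
# ASSUMPTION: 32,768 tokens for the 32K GPT-4 model.
# fits_in_context is a hypothetical helper for illustration only.
CONTEXT_LIMIT_32K = 32_768


def fits_in_context(prompt_tokens: int, response_tokens: int,
                    limit: int = CONTEXT_LIMIT_32K) -> bool:
    """True if the input and output together stay within the window."""
    return prompt_tokens + response_tokens <= limit


print(fits_in_context(6_000, 4_000))   # True: 10,000 tokens in total
print(fits_in_context(30_000, 4_000))  # False: 34,000 tokens exceeds the limit
```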

In summary, the context window is a crucial aspect of GPT-4’s ability to process and understand text. By extending the context window, GPT-4 can work with larger and more complex inputs, leading to better performance and more versatile applications in fields such as content generation, language translation, and question-answering systems.

Why is the larger context window in GPT-4 a game changer?

The larger context window in GPT-4 is a game-changer for several reasons. First and foremost, it allows the model to better understand and process long, complex texts. This is particularly beneficial when working with extensive documents, such as academic articles, legal contracts, or novels. Because GPT-4 can see a larger portion of the text at once, it can generate responses or create content that is not only contextually relevant but also more accurate, comprehensive, and coherent.

For example, imagine a user who wants a summary of a long research paper. With a smaller context window, the paper may not fit in a single prompt, so the model might miss critical information or produce a fragmented, disjointed summary. With GPT-4’s larger context window, the user can supply the entire document in one prompt, making it far more likely that the summary captures the essence of the paper, including its primary findings and conclusions.
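One way to see the difference is to count how many pieces a long paper must be split into before each piece fits in a single prompt. The sketch assumes a hypothetical 20,000-token paper and reserves 1,000 tokens of every window for instructions and the response; both numbers are illustrative, not measurements. Splitting a document into chunks and summarizing each one is a common workaround for small windows, shown here only for comparison.

```python
import math

# How many prompt-sized chunks does a long document need?
# ASSUMPTIONS: a 20,000-token paper and 1,000 tokens reserved per request for
# instructions and the model's answer. Both numbers are illustrative.
PAPER_TOKENS = 20_000
RESERVED_TOKENS = 1_000


def chunks_needed(doc_tokens: int, window: int, reserved: int = RESERVED_TOKENS) -> int:
    """Number of chunks so that each chunk plus the reserved budget fits in the window."""
    usable = window - reserved
    if usable <= 0:
        raise ValueError("window too small for the reserved budget")
    return math.ceil(doc_tokens / usable)


for name, window in [("4K", 4_096), ("8K", 8_192), ("32K", 32_768)]:
    print(f"{name}: {chunks_needed(PAPER_TOKENS, window)} chunk(s)")
```

Under these assumptions the paper needs 7 chunks with a 4K window, 3 with 8K, and just 1 with 32K, so only the largest window lets the model see the findings and conclusions together.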

Another area where the larger context window proves to be a game-changer is in the field of conversational AI. In the past, AI models like GPT-3 might have struggled to maintain context during extended conversations, leading to less coherent and relevant responses.

With GPT-4’s larger context window, the model can now hold a much larger portion of the conversation, allowing it to maintain context and generate more engaging, coherent, and contextually appropriate responses, which improves the overall user experience.
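Chat applications commonly keep a conversation inside the window by dropping the oldest turns first, and a bigger window simply means fewer turns get dropped. A minimal sketch of that idea, assuming a crude estimate of about four characters per token (a rough heuristic for English text) instead of a real tokenizer; `trim_history` and `estimate_tokens` are hypothetical helpers.

```python
# Keep the most recent chat turns that fit within a token budget.
# ASSUMPTION: ~4 characters per token as a rough estimate; a real system
# would count tokens with the model's own tokenizer.


def estimate_tokens(text: str) -> int:
    """Very rough token estimate: one token per four characters, minimum one."""
    return max(1, len(text) // 4)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Walk backwards from the newest message, keeping turns until the budget runs out."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["a" * 40, "b" * 40, "c" * 40]  # each message is ~10 tokens
print(trim_history(history, budget=25))   # keeps only the two newest messages
```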

In summary, the larger context window in GPT-4 significantly elevates the model’s capabilities across a wide range of applications. By enabling the AI to process and understand more substantial and complex textual information, GPT-4 can generate more accurate and contextually relevant content, paving the way for improved conversational AI systems, better content generation, and enhanced performance in various other fields that rely on natural language processing.

New use cases with a larger GPT-4 context window

Here are some examples of new use cases opened up by the expanded GPT-4 context window:

- Customer Support:
  - GPT-4’s improved comprehension enables highly capable virtual assistants
  - Handles a wide array of customer inquiries, including technical issues
  - Saves customers time and reduces human support agents’ workload
  - Revolutionizes company-customer interaction with personalized assistance

- Data Analysis and Decision-Making:
  - GPT-4 generates insights from vast amounts of unstructured data (social media, news, reviews)
  - Uncovers hidden patterns, trends, and correlations
  - Example: marketing teams analyze customer sentiment in real time across platforms
  - Policymakers use GPT-4 to analyze public opinion for informed decision-making

- Language Learning and Translation:
  - GPT-4’s enhanced understanding bridges language barriers more effectively
  - Assists learners in mastering grammar, vocabulary, and conversation skills
  - Real-time translation services with better context awareness
  - Facilitates smoother global communication and cultural exchange

- Creative Writing and Content Generation:
  - GPT-4’s larger context window enables more coherent and engaging content
  - Assists authors with writer’s block, brainstorming, and editing
  - Generates articles, blog posts, and marketing materials with rich, relevant context
  - Boosts content creators’ productivity and expands their creative capabilities

Conclusion

I have closely followed the development of GPT-4, and I can confidently say that its larger context window is nothing short of revolutionary. It has opened the door to a wide range of new applications, from customer support and data analysis to language learning and creative writing.

With improved comprehension, GPT-4 is not only making our lives easier but also connecting the world by bridging language barriers and fostering smoother global communication.

In an ever-evolving technological landscape, GPT-4’s expanded context window is undeniably a game-changer. It has set a new standard for AI models in natural language processing, and I’m genuinely excited to see how it continues to shape our future.

As we embrace GPT-4’s capabilities, we must also strive to use this technology responsibly and ethically, ensuring that the benefits are enjoyed by all while mitigating potential risks. Together, let’s harness the power of GPT-4 to create a brighter, more connected world.

How to Write a Great Story with GPT-4

For those of you still on the fence about whether AI writing tools like ChatGPT are of benefit to you, this post is for you.

ChatGPT is designed to mimic the human brain (kind of). While it still has a fair way to go, it’s pretty damn powerful, and today is the dumbest it will ever be, which is crazy, because it’s not dumb.

In the next 3-5 years (probably sooner) there will be completely new skill sets, one of them being prompt engineering.

This will be in huge demand because of the difference between suggestive and direct speech patterns.

I’d say around 80%+ of people fall into suggestive speech and will likely never become proficient at direct speech.

Direct speech is exactly what you need to thrive with AI tools like ChatGPT.

Understanding how to talk to these tools is make or break.

Here is the scary thing: this is going to disrupt white-collar industries more than any other industry out there.

People are usually 5+ years behind trends. NOW is the time to learn how to speak to AI.

I’ve been using AI tools like ChatGPT every single day for the past 2 years, not just for work but in my personal life.
