In my latest walkthrough, I embarked on an exploratory journey, merging the cutting-edge capabilities of GPT-4 Vision API with the innovative Dall-E 3 API.

What unfolded was nothing short of a creative dance between description and depiction, using machine learning to transform and evolve visual content. Let’s dive into how the process works, the principles behind it, and what it suggests for the future of autonomous image generation.

Read more or watch the YouTube video (recommended).

The Concept Behind The Image Evolution

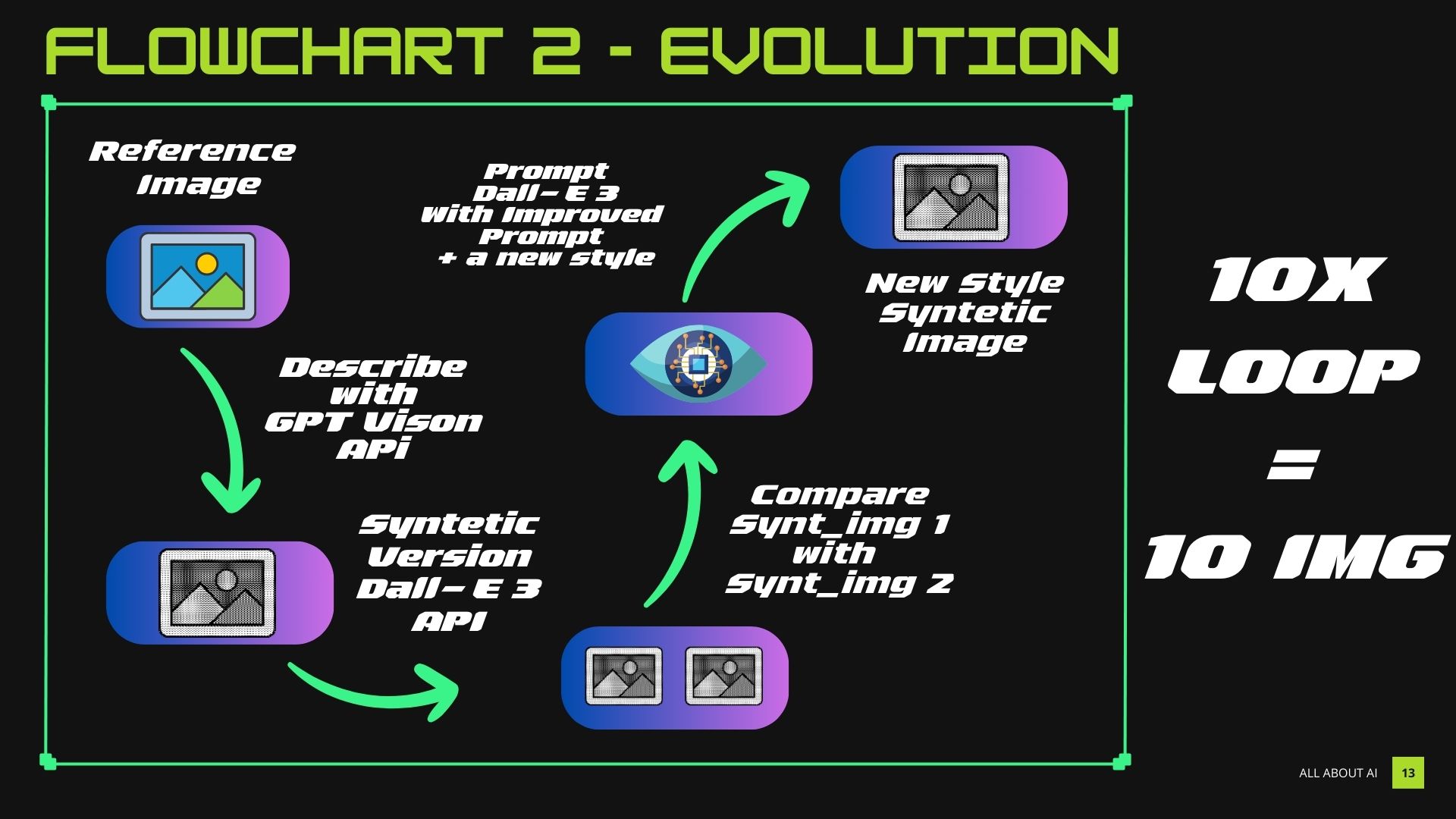

The goal was straightforward at first glance: To create a synthetic version of a given reference image, then iterate upon it. But beneath the surface, it’s a choreography of AI systems, each lending its perception and creativity to the output. Here’s how it goes:

- Starting with a Reference Image: We begin with an input – a reference image that serves as the baseline for all consecutive iterations.

- Descriptive Analysis with GPT-4 Vision API: The reference image is then fed into the GPT-4 Vision API. This state-of-the-art model analyzes the image and generates a detailed description, encompassing aspects like colors, features, theme, and style. This description effectively translates the visual into words, a format that becomes the foundation for the ensuing creative process.

- Synthetic Creation with Dall-E 3 API: Armed with this description, we prompt the Dall-E 3 API to generate a visual counterpart, creating a synthetic version of our original image.

- Iterative Comparison and Improvement: But we don’t stop there. The synthetic image is compared to our reference, and the GPT-4 Vision API goes to work again, this time offering a comparison and deriving an improved description.

- The Refinement Loop: This new description serves as the next prompt for Dall-E 3, continuing the refinement cycle. In my experiment, the loop ran ten times, yielding ten progressively refined synthetic images.
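
The steps above can be sketched as a small loop. This is only an illustration of the idea, not the code from the video: the function name `refinement_loop` and the three callables `describe`, `generate` and `compare` are hypothetical stand-ins for the API calls, passed in as parameters so the control flow is visible on its own.

```python
from typing import Callable, List


def refinement_loop(
    reference: str,
    describe: Callable[[str], str],
    generate: Callable[[str], str],
    compare: Callable[[str, str], str],
    iterations: int = 10,
) -> List[str]:
    """Generate `iterations` synthetic images, always comparing against the reference.

    All three callables are hypothetical placeholders:
      describe(image)          -> text description of one image
      generate(prompt)         -> a new synthetic image for a text prompt
      compare(reference, img)  -> an improved description after comparing both
    """
    # Step 1: turn the reference image into words.
    prompt = describe(reference)
    images: List[str] = []
    for _ in range(iterations):
        # Step 2: turn the current description into a synthetic image.
        synthetic = generate(prompt)
        images.append(synthetic)
        # Step 3: compare with the ORIGINAL reference and get a better prompt.
        prompt = compare(reference, synthetic)
    return images
```

Because the reference is passed to `compare` on every pass, each new prompt is anchored to the original image rather than to the previous attempt.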

Evolving Beyond the Original – The Evolution Variant

Beyond mere replication and refinement, I sought to imbue the process with an element of transformation. In the evolution variant of the experiment:

- Instead of continually referencing the original image, each new synthetic image is compared with the previous version.

- With each iteration, a new style is introduced, causing a visual evolution ranging from subtle metamorphosis to a drastic stylistic leap.

- This not only explores the range of the AI’s creative capacity but also illustrates a tangible trajectory from the original inspiration to a wholly transformed piece.
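
A minimal sketch of the evolution variant, under a few assumptions: the styles come from a caller-supplied list (the post does not say how each new style is chosen), the first comparison uses the reference as the "previous" image, and `describe`, `generate` and `compare` are hypothetical stand-ins for the API calls.

```python
from typing import Callable, List, Sequence


def evolution_loop(
    reference: str,
    describe: Callable[[str], str],
    generate: Callable[[str], str],
    compare: Callable[[str, str], str],
    styles: Sequence[str],
) -> List[str]:
    """Generate one image per style, comparing each image with its predecessor.

    The wording of the style instruction below is an assumption made for
    illustration; it is not taken from the original experiment.
    """
    prompt = describe(reference)
    previous = reference  # assumption: the first comparison is against the reference
    images: List[str] = []
    for style in styles:
        # Introduce a new style on every iteration.
        current = generate(f"{prompt} Render it in this style: {style}.")
        images.append(current)
        # Compare with the PREVIOUS image, not the original reference.
        prompt = compare(previous, current)
        previous = current
    return images
```

The only structural difference from a plain refinement loop is the `previous = current` line: the comparison target moves forward with every pass, so drift accumulates instead of being corrected.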

Practical Walkthrough: Merging Code with Creativity

Let’s peel back the layers of complexity and talk about the practical aspects of the experiment. First, we designed functions to articulate our communication with the APIs:

- Vision API Describe Image: Takes an image and uses the Vision API to generate a detailed description.

- Dall-E Generate Image: Uses the Dall-E 3 model to create an image based on the prompt received from GPT-4 Vision API.

- Vision API Compare and Describe: A dual-image input function that provides an improved prompt by comparing the reference and synthetic images.
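
Here is a rough sketch of what these three helpers might look like. It assumes `client` is an `openai.OpenAI()` instance from version 1 of the official Python SDK; the function names, prompt wording, `max_tokens` value and the model names `gpt-4-vision-preview` and `dall-e-3` are assumptions for illustration and may differ from the code shown in the video.

```python
import base64


def encode_image(image_bytes: bytes) -> str:
    """Return a base64 data URL for raw image bytes (JPEG is assumed)."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return f"data:image/jpeg;base64,{b64}"


def image_part(image_bytes: bytes) -> dict:
    """Build one image entry for a chat message's content list."""
    return {"type": "image_url", "image_url": {"url": encode_image(image_bytes)}}


def vision_describe_image(client, image_bytes, model="gpt-4-vision-preview"):
    """Ask the vision model for a detailed description of one image."""
    content = [
        {"type": "text",
         "text": "Describe this image in detail: colors, features, theme and style."},
        image_part(image_bytes),
    ]
    response = client.chat.completions.create(
        model=model,  # assumed model name
        messages=[{"role": "user", "content": content}],
        max_tokens=500,  # assumed limit
    )
    return response.choices[0].message.content


def dalle_generate_image(client, prompt, model="dall-e-3"):
    """Generate one image from a text prompt and return its URL."""
    response = client.images.generate(
        model=model, prompt=prompt, size="1024x1024", n=1
    )
    return response.data[0].url


def vision_compare_and_describe(client, reference_bytes, synthetic_bytes,
                                model="gpt-4-vision-preview"):
    """Send both images and ask for an improved description of the first."""
    content = [
        {"type": "text",
         "text": "The first image is the reference, the second is a synthetic "
                 "copy. Compare them and write an improved description of the "
                 "reference that would bring the copy closer to it."},
        image_part(reference_bytes),
        image_part(synthetic_bytes),
    ]
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": content}],
        max_tokens=500,
    )
    return response.choices[0].message.content
```

Passing `client` in as a parameter keeps the helpers easy to try out with a stub object before spending real API credits.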

Additionally, rate limits and sleep timers were set up to manage the frequency of API calls, which keeps our experiment within operational limits.
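
One simple way to space out calls is a minimum-interval throttle built on `time.sleep`. The sketch below is an assumption about how such a timer could look, not the code from the experiment; the class name `Throttle` and the 12-second interval in the usage note are hypothetical, and the actual limits depend on your account.

```python
import time


class Throttle:
    """Ensure at least `min_interval` seconds pass between consecutive calls."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None      # time of the previous call, None before the first one

    def wait(self):
        """Block until the minimum interval since the last call has elapsed."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

In use, you would create something like `throttle = Throttle(12.0)` once and call `throttle.wait()` right before each API request inside the loop.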

From Theory to Reality: The Experiments

To put the system to the test, we began with the iconic Iwo Jima flag-raising photograph as our reference. Through the loop, the fidelity of the synthetic image improved dramatically, which suggests the system handles highly recognizable subjects well.

Evolution with Breaking Bad’s Walter White

Switching to the evolution variant, we used a still of Walter White from Breaking Bad. The result was startling: the gas mask morphed, new elements such as steampunk motifs crept in, and with each iteration the image took on a life of its own, straying further from the original while keeping a semblance of its essence.

Creative Twists with a Personal Illustration

I also introduced a personal creation: a retro 90s illustration representing the Python programming language. Iterating through, each synthetic image diverged into distinct concepts, from mechanical keyboards to musical control panels, showcasing the power of the AI to create a lineage of creativity from a single reference point.

Conclusion

The marriage between GPT-4 Vision API and Dall-E 3 API is not just a technical feat; it’s a poetic symphony of algorithms interacting to create visual narratives that evolve independently. While there’s much room for optimization, particularly in honing the prompts and ironing out bugs, the potential is vivid and exciting.

Indeed, artificial intelligence is not just a tool; it’s a collaborator that pushes boundaries and expands horizons of creativity. As I wrap up this venture, I am thrilled to make the code available on GitHub for others to explore, tweak, and augment.

This is just a glimpse into the kaleidoscope of possibilities that AI can offer in the realm of autonomous image generation. Keep an eye out; the future is visually intelligent.

FAQ

Can you explain the step-by-step process involved in combining the GPT-4 Vision API with the Dall-E 3 API for autonomous image generation?

Sure, the process can be broken down into several key steps:

- Start with an input image: Select an original reference image that you want the AI to recreate or iterate on.

- Analyze with GPT-4 Vision API: Use the Vision API to analyze the image and produce a detailed description, capturing its essence in words.

- Generate with Dall-E 3 API: Take the description provided by the Vision API and feed it into the Dall-E 3 API to create a visual representation based on the textual prompt.

- Iterative Refinement: Continuously loop the process, providing the Dall-E 3 generated image back to the Vision API for comparison and a refined description, which is then used for subsequent image generation by Dall-E 3. This can be repeated for multiple iterations.

What is a Large Multimodal Model (LMM)?

A Large Multimodal Model (LMM) is a type of AI model designed to process diverse types of data like text and images simultaneously. GPT-4 Vision falls under this category and is particularly skilled at providing a comprehensive understanding of both visual and textual data.

How can I access GPT-4 Vision?

As of the time of writing, GPT-4 Vision is accessible via a $20-per-month ChatGPT Plus subscription and via the API.

What is Dall-E 3?

Dall-E 3 is OpenAI’s newest image generator at the time of writing. You can find it in ChatGPT or use it through the Dall-E 3 API.