In the fast-paced universe of artificial intelligence, the arrival of large multimodal models (LMMs) like GPT-4 Vision has created waves of excitement. With the ability to understand both text and images, GPT-4V is a marvel in the world of machine learning.

Today, we’ll peel back the layers of this fascinating technology and delve into some real-world examples to better understand its incredible use cases.

Read on, or watch the YouTube video (recommended).

What is a Large Multimodal Model (LMM)?

A Large Multimodal Model (LMM) is more than a standard machine learning model: it is engineered to process several data types, such as text, images, and potentially others, at the same time. GPT-4 Vision is designed to comprehend both visual and textual data, which makes it versatile in applications that need a combined understanding of what is written and what is shown.

For instance, it can not only read the textual labels in an image but also interpret the image’s content to provide a comprehensive understanding of the subject.

What is GPT-4 Vision?

GPT-4 Vision, or GPT-4V, is an advanced AI model by OpenAI that can understand both text and images. It’s a multimodal large language model, meaning it can interpret more than one type of input. Whether you upload a photo of a meal and ask for a recipe or share a picture of a plant you want to identify, GPT-4V can provide insightful answers.

Trained on large-scale text and image data and fine-tuned with Reinforcement Learning from Human Feedback (RLHF), it’s a leap forward in AI capabilities. However, it’s still a work in progress and has limitations, such as the inconsistent estimates in the bead-counting example below. GPT-4V is available through a $20-per-month ChatGPT Plus subscription and through OpenAI’s new GPT-4 Vision API.
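To make the API route concrete, here is a minimal Python sketch, assuming the official `openai` package (v1 or later) and an `OPENAI_API_KEY` environment variable. It pairs a text prompt with a base64-encoded image in a single Chat Completions request. The helper names are hypothetical, and the model name `gpt-4-vision-preview` is the one used when the API launched; treat it as an assumption that may since have been replaced, and check OpenAI’s current model list.

```python
import base64
import mimetypes
from pathlib import Path

# Assumption: launch-era model name; newer vision-capable models may replace it.
MODEL = "gpt-4-vision-preview"


def build_vision_messages(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> list[dict]:
    """Build a Chat Completions `messages` list that pairs a text prompt with one image."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                # The image travels inline as a base64 data URL.
                {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{b64}"}},
            ],
        }
    ]


def ask_about_image(path: str, prompt: str) -> str:
    """Send a local image plus a prompt to the API and return the model's text reply."""
    from openai import OpenAI  # imported here so build_vision_messages works without the SDK

    mime_type = mimetypes.guess_type(path)[0] or "image/png"
    messages = build_vision_messages(prompt, Path(path).read_bytes(), mime_type)
    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(model=MODEL, messages=messages, max_tokens=500)
    return response.choices[0].message.content
```

For example, `print(ask_about_image("rosehip.jpg", "What plant is this, and is it edible?"))`. Keeping the payload builder separate from the network call lets you inspect or test the request without an API key.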

Top 10 Use Cases of GPT-4 Vision

Here are some of the use cases I have tested with GPT-4V so far, though they are just a drop in the ocean of what you can do with this technology. I will also be exploring prompt engineering for GPT-4V going forward.

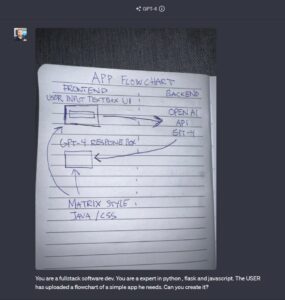

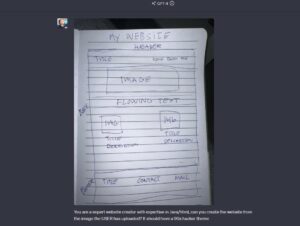

1. Transforming App Sketches into Code

Imagine turning a simple hand-drawn app flowchart into a working app. GPT-4 Vision can do exactly that: it can interpret sketches outlining frontend and backend components and generate a working Flask app with HTML, CSS, and JavaScript files. This isn’t just theoretical; I’ve seen it generate an app from a sketch that included the frontend UI, backend API calls, and even styling.
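Getting the generated app from a chat reply onto disk is left implicit above. One low-effort approach, sketched here under our own assumptions, is to prompt the model to answer with only a JSON object mapping file paths (for example `app.py`, `templates/index.html`, `static/style.css`, the folders Flask looks in by default) to their contents, then parse and write it. The helper names and the JSON reply format are hypothetical choices for illustration, not something GPT-4V guarantees; models don’t always follow format instructions, so the parser validates what it receives.

```python
import json
from pathlib import Path, PurePosixPath

FENCE = "`" * 3  # three backticks, built this way so the snippet can sit inside a Markdown code block


def parse_generated_files(response_text: str) -> dict[str, str]:
    """Parse a reply assumed to be a JSON object of {relative_path: file_contents}."""
    text = response_text.strip()
    if text.startswith(FENCE):
        # Models sometimes wrap JSON in a code fence anyway: drop the opening and closing fence lines.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rstrip()
        if text.endswith(FENCE):
            text = text[: -len(FENCE)]
    files = json.loads(text)
    if not isinstance(files, dict):
        raise ValueError("expected a JSON object mapping file paths to contents")
    for rel_path, contents in files.items():
        path = PurePosixPath(rel_path)
        # Refuse absolute paths and '..' so generated files stay inside the project folder.
        if path.is_absolute() or ".." in path.parts:
            raise ValueError(f"unsafe path in model output: {rel_path!r}")
        if not isinstance(contents, str):
            raise ValueError(f"contents of {rel_path!r} must be a string")
    return files


def write_generated_files(files: dict[str, str], out_dir: str) -> list[Path]:
    """Write parsed files under out_dir, creating subfolders such as templates/ and static/."""
    written = []
    for rel_path, contents in files.items():
        target = Path(out_dir) / rel_path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(contents, encoding="utf-8")
        written.append(target)
    return written
```

In practice you would add an instruction like “Return only a JSON object mapping each file path to its contents” to the prompt that accompanies the sketch, then call `write_generated_files(parse_generated_files(reply), "sketch_app")` and run the resulting `app.py`.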

2. Precision in Bead Counting

GPT-4V can analyze an image of a jar filled with beads and estimate how many beads are inside. For example, when given a photo of a jar containing 27,800 beads, the model’s first estimate was 27,000, within about 3% of the true count. Subsequent estimates varied, so that accuracy was not consistent, but the precision of the first guess was mind-blowing.
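To put the first estimate’s accuracy in numbers, here is its relative error against the true count (only the first guess is computed, since the later estimates aren’t reported):

```latex
\text{relative error} = \frac{\lvert 27{,}800 - 27{,}000 \rvert}{27{,}800} = \frac{800}{27{,}800} \approx 0.029 \approx 2.9\%
```

In other words, the first guess was off by roughly one bead in every 35.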

3. Summarizing Video Content

From a single screenshot of a complex YouTube video, GPT-4 Vision can produce a detailed explanation of what the video covers. It can identify on-screen headings and subheadings, and even the subject the host is discussing. It’s like having an on-demand interpreter for complex video content.

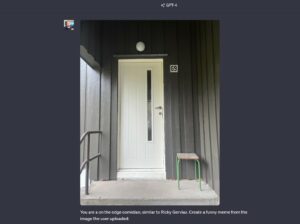

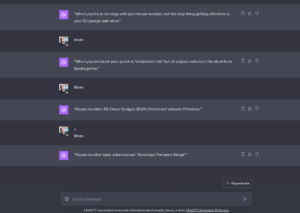

4. Crafting Memes with Wit

Humor and wit aren’t beyond the reach of GPT-4V. In one instance, an image of a front porch with the number 69 was transformed into a hilarious meme with the caption “House number says adventurous, stool says I’ve seen things.” The model understands the elements in the image and crafts jokes that resonate with human humor.

5. Expert Camping Advice

This model can also be your wilderness guide. Given images of a forest and a riverside, GPT-4V evaluated both locations’ pros and cons. It looked at natural shelter, proximity to water, and even flatness of the ground before recommending a hybrid location near the forest edge but close to the river.

6. Identifying Edible Plants

The model can identify edible plants, such as rosehips, and suggest uses for them, like making jams, jellies, or teas. It even cautions users to remove the seeds and fine hairs inside rosehips, as they can cause irritation if ingested.

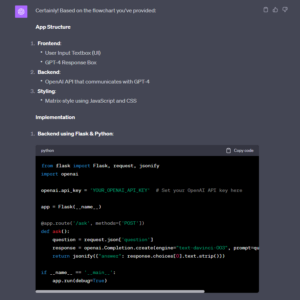

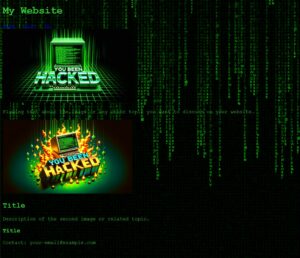

7. Web Development from Paper to Screen

From a simple sketch on paper to a working website, GPT-4 Vision can make it happen. For example, it interpreted a hand-drawn layout to create an HTML website complete with a ’90s hacker theme and Matrix rain effects. The model even coded a pop-up countdown that alerts visitors they’ll be “hacked” in 10 seconds!
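The post doesn’t include the generated code, and the model’s version was HTML and JavaScript. To illustrate how a “Matrix rain” effect typically works, here is a terminal-based Python sketch of the same idea: each column tracks the row of a falling “head” that drops one row per frame, leaves a short trail of random characters, and restarts at the top at random once its trail has left the screen. The function name, glyph set, and `trail`/`reset_chance` parameters are our own assumptions, not what GPT-4V produced.

```python
import random

# ASCII glyphs keep this terminal-friendly; swap in other characters (e.g. katakana) if you like.
GLYPHS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def matrix_rain_frames(width, height, n_frames, trail=5, reset_chance=0.1, seed=None):
    """Yield n_frames text frames (height lines of width characters) of a 'Matrix rain' effect."""
    rng = random.Random(seed)
    # Each column's falling "head" starts at or above the top row so the columns are staggered.
    drops = [-rng.randrange(height) for _ in range(width)]
    grid = [[" "] * width for _ in range(height)]
    for _ in range(n_frames):
        for col, head in enumerate(drops):
            if 0 <= head < height:
                grid[head][col] = rng.choice(GLYPHS)  # draw a fresh glyph at the head
            tail = head - trail
            if 0 <= tail < height:
                grid[tail][col] = " "  # erase the oldest glyph so each trail stays `trail` cells long
            if tail >= height and rng.random() < reset_chance:
                drops[col] = 0  # trail has left the screen: sometimes restart the column at the top
            else:
                drops[col] = head + 1  # otherwise keep falling one row per frame
        yield "\n".join("".join(row) for row in grid)
```

To animate it, print each frame prefixed with the ANSI escape `"\033[H\033[J"` (cursor home, clear screen) and pause briefly between frames with `time.sleep(0.05)`. The browser version follows the same per-column logic, drawing onto a canvas instead of a character grid.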

8. Botanical Identification

Given an image of a flower, GPT-4 Vision can identify its species. For instance, it accurately identified a cranesbill flower in one of the examples, providing not just the name but also interesting botanical facts.

9. Elevating Your Fantasy Sports Strategy

For Fantasy Premier League (FPL) fans, GPT-4V can analyze team statistics and the fixture list to recommend players for the next round of games. It even gives a short description of each fixture to help you make informed decisions.

10. Tailored TV Show Recommendations

If you’re a fan of ‘The Office,’ GPT-4 Vision can recommend similar shows such as ‘Parks and Recreation,’ ‘Brooklyn Nine-Nine,’ and ‘30 Rock’ based on a screenshot from the series. It’s like having your own personalized TV guide.

Conclusion

GPT-4V is a technological wonder that is rewriting the rules of what AI can achieve, and I’m confident it will become part of the AI engineer’s tech stack. Its ability to interpret both text and images makes it a game-changer for applications ranging from software development to everyday convenience and entertainment.

The real-world examples we’ve discussed reveal the model’s astonishing versatility and precision. As we continue to explore its capabilities, one thing is clear: the future holds limitless possibilities with GPT-4 Vision at the helm.

FAQ

What is GPT-4 Vision?

GPT-4 Vision, or GPT-4V, is an advanced AI model developed by OpenAI that can understand both text and images. It’s a large multimodal model, meaning it can interpret more than one type of data, which makes it versatile across a wide range of applications.

What is a Large Multimodal Model (LMM)?

A Large Multimodal Model (LMM) is a type of AI model designed to process diverse types of data like text and images simultaneously. GPT-4 Vision falls under this category and is particularly skilled at providing a comprehensive understanding of both visual and textual data.

How can I access GPT-4 Vision?

As of the time of writing, GPT-4 Vision is available in ChatGPT with a $20-per-month ChatGPT Plus subscription, including in the iOS and Android apps. Developers can also access it through OpenAI’s GPT-4 Vision API.

What are some real-world use cases for GPT-4 Vision?

GPT-4 Vision can be used for a variety of applications such as transforming hand-drawn app sketches into code, providing expert camping advice, identifying edible plants, and even crafting memes with wit. It is versatile and can be applied in diverse fields.