When you have two impressive iterations of language models, the immediate question that pops up is, “What happens when you combine them?” That’s exactly the rabbit hole I recently went down. I decided to experiment with fine-tuning a ChatGPT 3.5 model using synthetic data generated by its big sibling, GPT-4.

Is it possible to create a super ChatGPT? Or is it just a colossal waste of computational resources and time? Well, you’re in for a treat because I’ll take you through this intriguing experiment step-by-step.

The Potential of Synthetic Datasets

Synthetic data isn’t new; it’s been used in various industries for simulations, testing, and training machine learning models. However, the concept of using synthetic data for fine-tuning chatbots, particularly advanced ones like ChatGPT, is relatively unexplored.

The primary benefit is that synthetic data can be tailored to specific needs, allowing for more controlled fine-tuning. In this case, the ‘tailoring’ is being done by none other than GPT-4, a model even more advanced than the one we’re trying to fine-tune.

Why use GPT-4?

GPT-4 is one of the latest and most advanced language models available, so it makes sense to leverage its capabilities for generating synthetic datasets. My hypothesis is that a dataset generated by GPT-4 would be sophisticated enough to provide meaningful fine-tuning to ChatGPT 3.5.

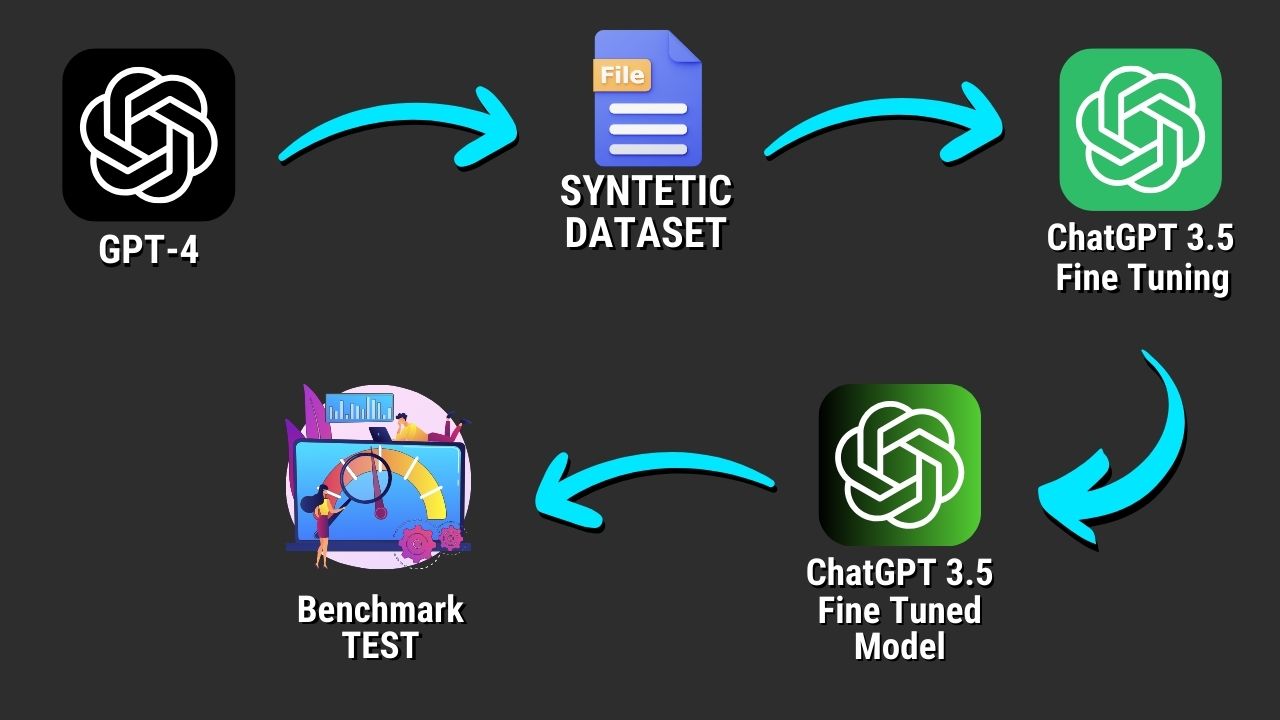

The Workflow

Step 1: Deciding the Dataset

First, I had to decide what kind of synthetic dataset I wanted GPT-4 to generate. I chose a mixture of riddles, math problems, and logical problems. Why? Because these types of problems are easy to benchmark and they provide a robust testing ground for both the original ChatGPT and the fine-tuned model.

Step 2: Automating Data Generation

I didn’t want to manually type each problem into GPT-4; that would be incredibly time-consuming. So I wrote a Python script that automates the task with some prompt engineering. The script calls OpenAI’s API, sends a problem to GPT-4, receives the generated response, and saves it in a structured format (JSONL) that’s suitable for fine-tuning.
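Here is a minimal sketch of what such a script might look like, assuming the `openai` Python package (v1 or later) and an `OPENAI_API_KEY` environment variable. The system prompt, the function names, and the file name are hypothetical stand-ins, not the ones from the experiment. Each line of the output file is one JSON object with a `messages` list, which is the layout OpenAI's chat fine-tuning expects.

```python
import json

# Hypothetical system prompt; the prompt actually used in the experiment is not shown.
SYSTEM_PROMPT = "You are a careful problem solver. Reason step by step, then state the final answer."


def ask_gpt4(problem: str) -> str:
    """Send one problem to GPT-4 and return its reply (needs network access and an API key)."""
    from openai import OpenAI  # imported lazily so the helpers below work without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": problem},
        ],
    )
    return response.choices[0].message.content


def to_training_record(problem: str, answer: str) -> dict:
    """Wrap a problem/answer pair in the chat fine-tuning layout."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": problem},
            {"role": "assistant", "content": answer},
        ]
    }


def build_dataset(problems, path, ask=ask_gpt4):
    """Answer every problem with `ask` and write one JSON object per line; returns the count written."""
    written = 0
    with open(path, "w", encoding="utf-8") as f:
        for problem in problems:
            record = to_training_record(problem, ask(problem))
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            written += 1
    return written
```

Calling `build_dataset(problems, "synthetic.jsonl")` would query GPT-4 once per problem. Passing a different `ask` function makes it easy to dry-run the pipeline without spending any API credits.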

Step 3: Fine-Tuning ChatGPT 3.5

Once the synthetic dataset was ready, it was time to fine-tune the ChatGPT 3.5 model. This involved running another Python script that uploads the dataset to OpenAI, creates a fine-tuning job, and monitors the fine-tuning process.
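The upload, create, and monitor steps could be sketched roughly as follows. This assumes the `openai` Python package (v1 or later); the function name `run_fine_tune`, the polling interval, and the choice of `gpt-3.5-turbo` as the base model identifier are my assumptions, not details from the original script.

```python
import time


def run_fine_tune(client, dataset_path, base_model="gpt-3.5-turbo", poll_seconds=30):
    """Upload a JSONL file, start a fine-tuning job, and poll until it finishes.

    `client` is expected to be an `openai.OpenAI()` instance (package v1 or later).
    """
    # 1. Upload the training file.
    with open(dataset_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")

    # 2. Create the fine-tuning job on top of the base model.
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=base_model)

    # 3. Poll until the job reaches a terminal state.
    while job.status not in ("succeeded", "failed", "cancelled"):
        time.sleep(poll_seconds)
        job = client.fine_tuning.jobs.retrieve(job.id)

    return job
```

With a real client, `run_fine_tune(OpenAI(), "synthetic.jsonl")` returns the finished job, and on success `job.fine_tuned_model` holds the name to use in later chat completion calls.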

Step 4: Benchmarking

The final step was to benchmark the fine-tuned model against the vanilla ChatGPT and GPT-4. For this, I used a set of unique problems that were not part of the training data.
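A benchmark like this can be reduced to a small scoring loop. The sketch below is one possible way to do it, not the harness used in the experiment: each model is represented by a hypothetical function that takes a question and returns a reply, and a reply counts as correct if it contains the expected answer. That substring check is crude (an expected "4" would also match "14"), so treat it as a starting point.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences do not count as errors."""
    return " ".join(text.lower().split())


def accuracy(answer_fn, problems):
    """Fraction of problems whose expected answer appears in the model's reply.

    `answer_fn` maps a question string to the model's reply; `problems` is a list of
    (question, expected_answer) pairs held out from the training data.
    """
    if not problems:
        raise ValueError("need at least one benchmark problem")
    correct = sum(
        normalize(expected) in normalize(answer_fn(question))
        for question, expected in problems
    )
    return correct / len(problems)


def compare(models, problems):
    """Score several models on the same held-out problems; returns {name: accuracy}."""
    return {name: accuracy(fn, problems) for name, fn in models.items()}
```

Running all three models through `compare` on the same held-out list keeps the comparison fair, since every model sees identical questions.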

Chain of Thought Principles in Synthetic Datasets

What Is Chain of Thought Prompting?

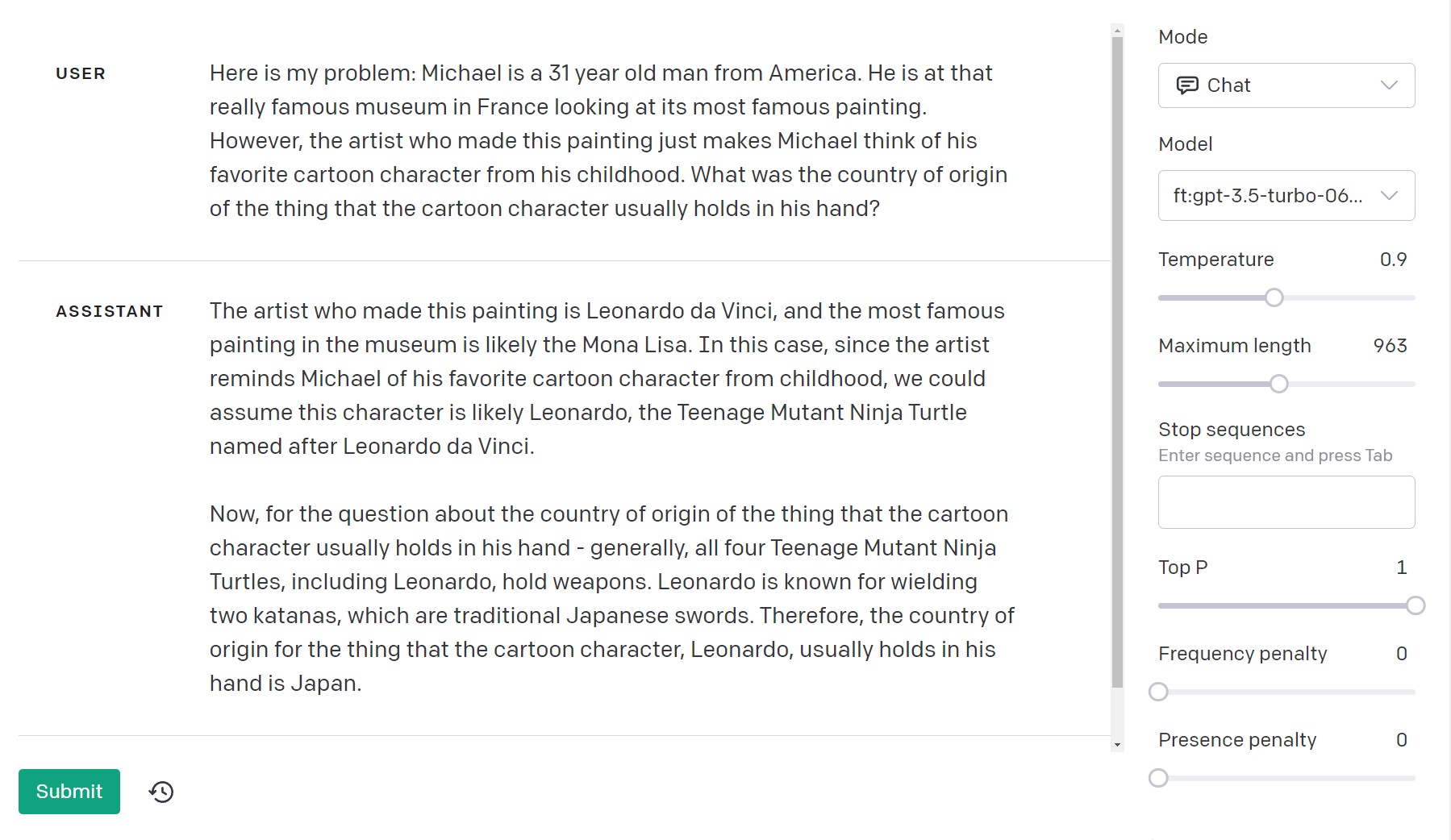

In large language models like ChatGPT and GPT-4, prompt engineering plays a critical role. Chain of Thought prompting asks the model to work through a complex question as a series of smaller intermediate steps instead of jumping straight to an answer, which guides it along a structured reasoning path.

Why Use Chain of Thought?

Applying the Chain of Thought principle in generating synthetic data for training has multiple advantages:

- Improved Accuracy: The model handles each part of a complex problem with greater precision.

- Problem Decomposition: It makes complex problems easier to tackle.

- Handling Ambiguity: It allows the model to make educated guesses at each stage, leading to more reliable outcomes.

Chain of Thought in Action

Imagine a synthetic dataset designed to train ChatGPT 3.5 for medical diagnosis support. A straightforward prompt like, “Diagnose the symptoms of headache, nausea, and fatigue,” may result in vague or incorrect responses.

However, using Chain of Thought, the dataset can first ask the model to identify possible causes for each symptom separately and then integrate these into a final diagnosis. This makes the model more effective and reliable.
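To make the decomposition concrete, here is a small illustrative sketch that turns a list of symptoms into one prompt per symptom plus a final integration prompt. The function name and the prompt wording are hypothetical; they only show the shape of the idea, not the prompts used in the experiment.

```python
def chain_of_thought_prompts(symptoms):
    """Return one prompt per symptom, followed by a final prompt that integrates the findings."""
    steps = [
        f"Step {i}: List the possible causes of {symptom}."
        for i, symptom in enumerate(symptoms, start=1)
    ]
    final = (
        f"Step {len(symptoms) + 1}: Considering the causes listed above for "
        + ", ".join(symptoms)
        + ", which conditions could explain all of these symptoms together? "
        "Explain your reasoning before giving a conclusion."
    )
    return steps + [final]


for prompt in chain_of_thought_prompts(["headache", "nausea", "fatigue"]):
    print(prompt)
```

The per-symptom steps come first so that the final prompt can refer back to them, mirroring the "separately, then integrate" order described above.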

The Results

Synthetic Data Costs

Generating synthetic data using GPT-4 wasn’t cheap. For 170 examples, I spent $32. The fine-tuning cost was $3, bringing the total to $35. It’s a noteworthy investment, especially if you consider scaling up the number of examples.
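To get a feel for what scaling up might cost, the figures above can be turned into a rough per-example estimate. The sketch assumes cost grows linearly with the number of examples, which is a simplification: real costs depend on token counts per example and on current pricing.

```python
EXAMPLES = 170
GENERATION_COST = 32.0   # USD spent on GPT-4 data generation
FINE_TUNING_COST = 3.0   # USD spent on the fine-tuning job


def projected_cost(n_examples: int) -> float:
    """Linear projection of total cost (generation + fine-tuning) for n_examples."""
    per_example = (GENERATION_COST + FINE_TUNING_COST) / EXAMPLES
    return per_example * n_examples


print(f"Generation cost per example: ${GENERATION_COST / EXAMPLES:.2f}")
print(f"Projected total for 1,000 examples: ${projected_cost(1000):.2f}")
```

Under that linear assumption, generation works out to roughly $0.19 per example, and 1,000 examples would land at a little over $200 in total.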

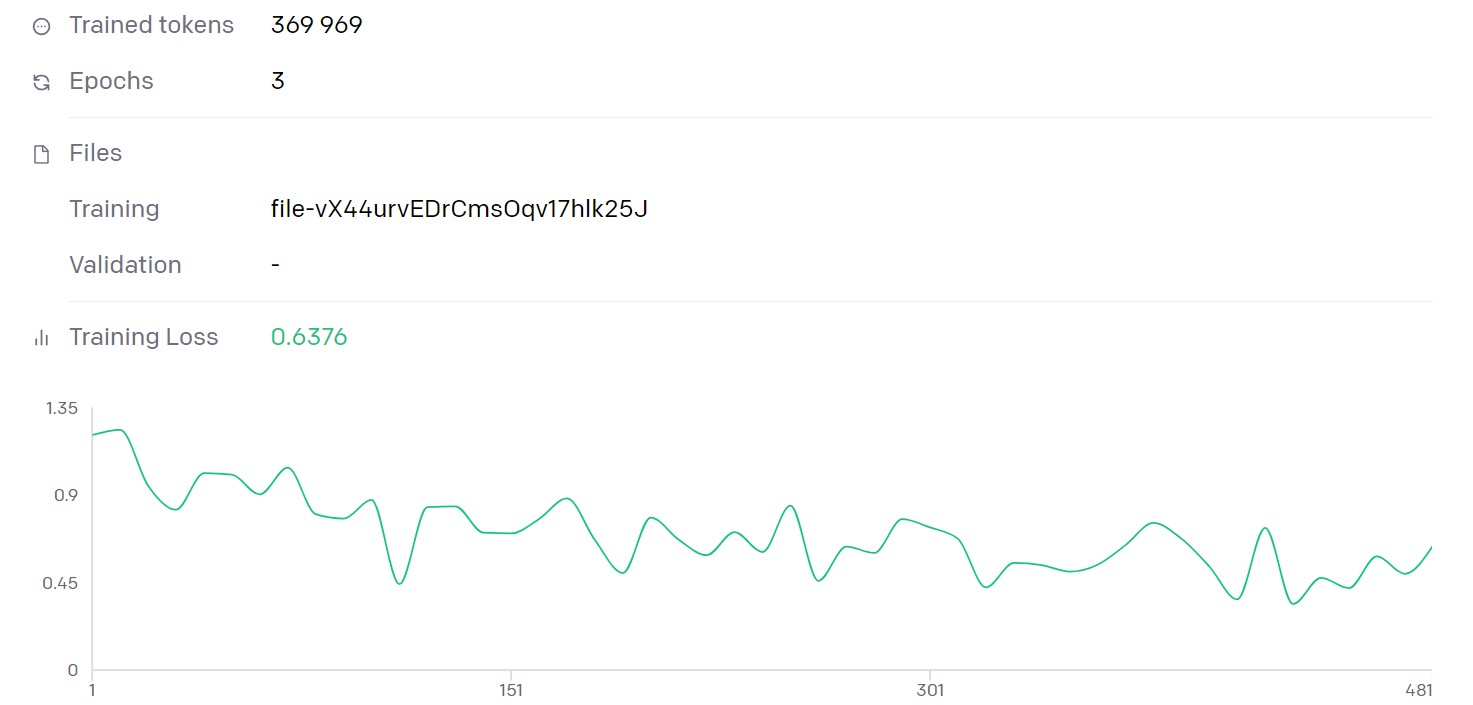

The Fine-Tuning Process

The fine-tuning process was intriguing. OpenAI’s new interface lets you monitor metrics such as training loss as the epochs progress. My model started with a training loss of 1.2, which halved to 0.6 as it trained, indicating that the model was fitting the training data well.

Benchmarking Insights

Now, this was the fun part. My fine-tuned ChatGPT 3.5 model performed significantly better than the vanilla ChatGPT on the set of unique problems. It solved complex problems using a step-by-step approach, showing clear improvements in reasoning. It didn’t match GPT-4, but it showed potential.

Conclusion

Fine-tuning ChatGPT 3.5 with synthetic data generated by GPT-4 was a fascinating experiment that yielded promising results. It offered insights into the capabilities and limitations of both models and opened up avenues for future research and applications.

While the costs associated with generating synthetic datasets can be high, the potential improvements in performance could justify the investment for specific use-cases. It also raises interesting questions about the role of synthetic data in training future AI models.

In the end, the experiment left me with more questions than answers, and that’s the beauty of delving into the world of AI. It’s a constantly evolving field that never ceases to amaze. Will synthetic data be the future? Only time will tell, but for now, it’s safe to say that the future looks exciting.

FAQ

What is the main goal of fine-tuning ChatGPT 3.5 with GPT-4 synthetic datasets?

The main goal is to explore whether ChatGPT 3.5’s performance can be enhanced by leveraging the advanced capabilities of GPT-4 through synthetic datasets.

What is synthetic data?

Synthetic data refers to artificial data generated, often by algorithms or computer simulations, rather than being collected from real-world events.

Can you fine-tune ChatGPT with Synthetic Data?

Yes, it’s possible to fine-tune ChatGPT, specifically ChatGPT 3.5, using synthetic datasets. This blog post provides a step-by-step guide on how to achieve this using data generated by GPT-4.

How does Chain of Thought improve synthetic data training?

Using the Chain of Thought principle in synthetic data training offers improved accuracy, makes complex problems more approachable, and provides a structured method for the model to tackle ambiguous queries, leading to more reliable results.